January 2026 Maintenance - Introducing Phoenix Scratch

This post summarizes the updates and improvements deployed during the January 2026 maintenance window. Changes to Sol were minimal, while Phoenix received a major enhancement with the introduction of Phoenix Scratch, a new high performance scratch storage system. Together, these updates improve performance, security, and overall usability across the clusters.

Introducing Phoenix Scratch

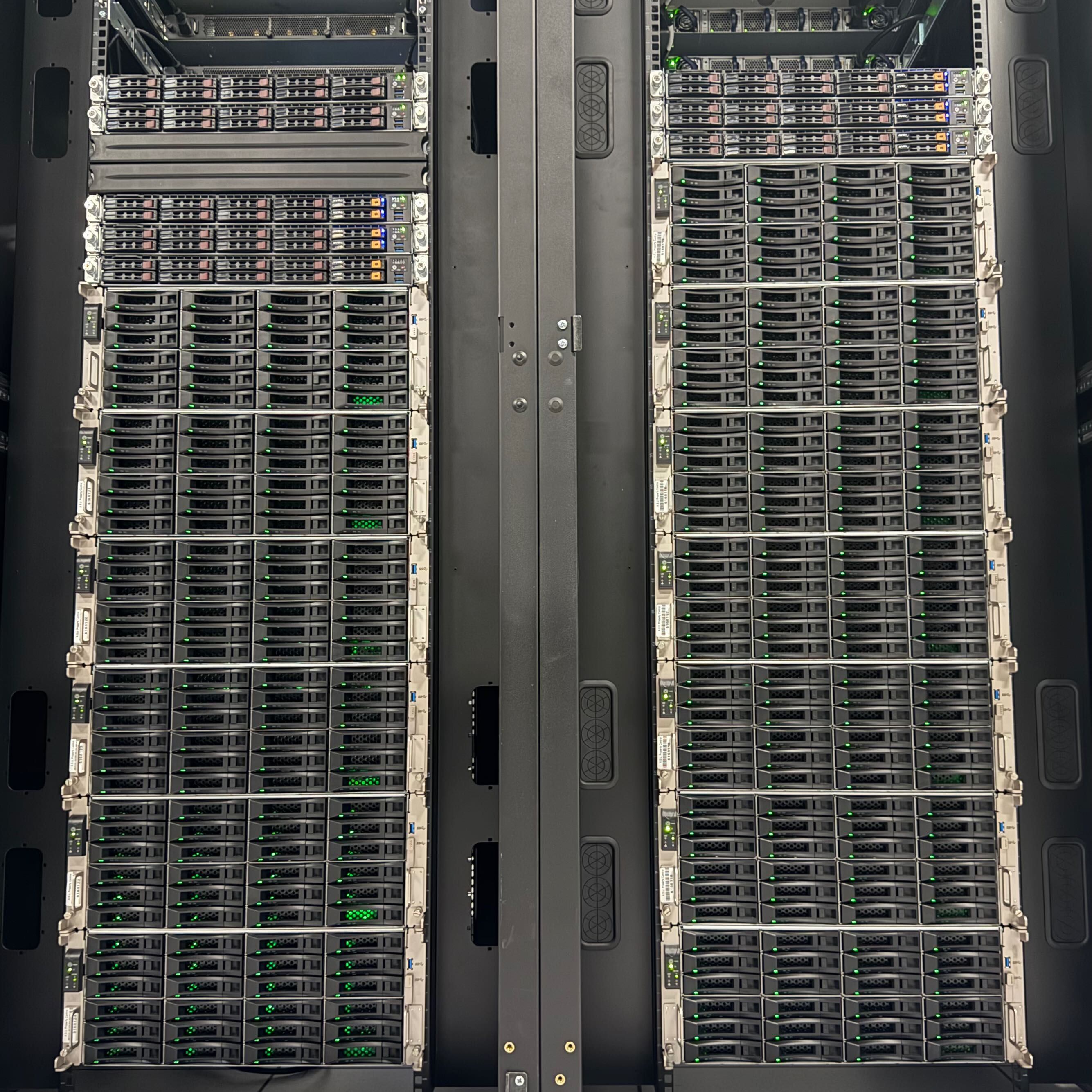

Two racks housing the storage and networking infrastructure for Phoenix Scratch in the Iron Mountain Data Center.

We are pleased to announce the availability of Phoenix Scratch, a new high performance parallel scratch filesystem designed to support data intensive workloads on Phoenix.

- 3 PiB of shared storage space

- Directly mountable on InfiniBand, Omni-Path, and Ethernet fabrics

- High throughput and low latency for I/O intensive applications

- Parallel file system support for seamless integration with existing workflows

- NVMe backed metadata for improved responsiveness

- Policy based quotas for flexible and fair storage management

As with Sol Scratch, Phoenix Scratch is intended for temporary storage only. It is well suited for active job data, checkpoints, and intermediate results, but it should not be used for long-term data retention. The automatic 90-day data retention policy applies to both Phoenix Scratch and Sol Scratch. Users should ensure that important data is backed up to appropriate long-term storage systems.
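To make the retention window concrete, here is a minimal Python sketch that lists files in a directory tree whose last modification is older than a given number of days. It rests on assumptions that this post does not confirm: that file age is judged by modification time, and that you run it against your own scratch directory. The function name `files_older_than` and the constant `RETENTION_DAYS` are illustrative, not part of any cluster tooling.

```python
import os
import time
from pathlib import Path

RETENTION_DAYS = 90  # retention window stated in this post


def files_older_than(root, days, now=None):
    """Yield (path, age_in_days) for non-directory entries under `root`
    whose modification time is more than `days` days in the past.

    Assumption: age is measured by mtime. The actual purge policy may
    use a different timestamp.
    """
    now = time.time() if now is None else now
    cutoff = now - days * 86400  # seconds per day
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                mtime = path.lstat().st_mtime
            except OSError:
                continue  # entry vanished or is unreadable; skip it
            if mtime < cutoff:
                yield path, (now - mtime) / 86400
```

Calling `files_older_than(my_scratch_dir, RETENTION_DAYS - 14)`, where `my_scratch_dir` is a placeholder for your own directory, would flag files roughly two weeks before they cross the 90-day mark under the mtime assumption, leaving time to copy anything important to long-term storage.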

Behind the Build

Bringing Phoenix Scratch online required significant infrastructure work, including over 800 meters of cabling and the installation of 507 HDDs, 60 SSDs, and 12 NVMe drives across 19 servers. The system is composed of three primary components:

Metadata Servers (MDS): Six high performance servers dedicated to metadata operations, each equipped with NVMe drives. These systems were built using nodes donated by Cirrus Logic and customized to support NVMe storage with drives donated by Intel.

Object Storage Servers (OSS): Thirteen storage servers providing the bulk data capacity and throughput. These systems use a mix of HDDs and SSDs and repurpose hardware previously deployed as the Cholla Storage System.

Networking: Each storage node is connected via 100 Gb Omni-Path, 100 Gb InfiniBand, and dual 40 Gb Ethernet links, ensuring high bandwidth and low latency access regardless of interconnect.

We extend our sincere thanks to our partners at Intel and Cirrus Logic for their generous hardware contributions, which made Phoenix Scratch possible. We also thank our researchers for their patience while Phoenix continued to operate during the deployment and integration of this new filesystem.

Transferring Data to Phoenix Scratch

Globus is the recommended method for transferring data to and from Phoenix Scratch. We have created a Globus collection specifically for Phoenix Scratch. For more information, see our documentation on transferring data between supercomputers.

Technical Updates

Infrastructure and Firmware

- Duo 2FA enabled for password-based SSH. More information can be found in the Duo 2FA Documentation.

- Slurm upgraded to 25.11.1 on Sol and Phoenix.

- Web portals updated to 4.0.8 on Sol and Phoenix.

- Swap enabled on Sol and Phoenix login nodes to improve stability during high memory usage.

- InfiniBand fabrics separated for Sol and Phoenix to improve performance and stability.

- Omni-Path fabric managers updated to 12.0.1.

- Horizon project storage updated to the latest patch release.

- Hypervisors upgraded to the latest stable release.

- Load-balancing infrastructure updated to improve both security and reliability.